Algorithms Don’t Feel — But They Decide

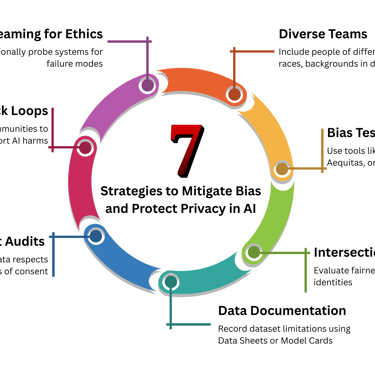

Discover 7 powerful, real-world strategies to reduce bias and protect privacy in AI systems. This human-centered article explores how diverse teams, bias testing tools, data transparency, and ethical red teaming can create more inclusive, trustworthy, and accountable AI. Learn from real examples and best practices used by leading organizations to build fair, responsible AI that puts people first.

DATA PRIVACY IN THE AGE OF AI

Nivarti Jayaram

6/16/2025 · 3 min read

“AI won’t wake up in the middle of the night worried about hurting someone. But the people it affects will.”

More than a decade ago, as the widely reported story goes, a father walked into a Target store, furious.

Why was his teenage daughter receiving coupons for baby products?

Turns out, Target’s algorithm had reportedly predicted her pregnancy from her shopping patterns, before she had told her family.

The model wasn’t malicious. It was just data-driven.

But the experience? Deeply human. Deeply personal.

And that’s the paradox we now face:

Algorithms don’t feel. But they decide — about credit scores, hiring, healthcare, bail eligibility, school admissions, and so much more.

The impact? Often profound.

The harm? Often invisible.

The accountability? Often missing.

So how do we fix it?

Let’s start here: We don’t need perfect algorithms. We need human-aware ones.

Fairness Isn't a Feature — It's a Practice

Here are seven strategies being used by forward-thinking organizations to make AI fairer, safer, and more respectful of the people it affects:

1. Build with Diverse Teams

“If everyone in the room looks the same, you’ve already built bias in.”

Commercial facial analysis systems tested by researchers at MIT Media Lab had error rates under 1% for lighter-skinned men, and up to 34% or more for darker-skinned women.

Why?

Because the people building and testing it didn’t reflect the world it was meant to serve.

Diverse teams — across race, gender, disability, language, class — don’t just check a box. They expand the lens. They see harm before it scales. They build inclusion into design, not as an afterthought.

2. Test for Bias (Like You Test for Bugs)

Tools like Fairlearn, IBM AI Fairness 360, and Aequitas help developers check whether models are disadvantaging certain groups.

Amazon famously built an AI to screen resumes, but it learned from 10 years of biased hiring data. It reportedly downgraded resumes that included the word "women's," as in "women's chess club captain."

The lesson?

Bias in = Bias out. But you won’t know unless you test for it.
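What might a bias test look like in practice? Here is a minimal sketch in plain Python, not the API of any of the tools named above. It compares selection rates between groups and fails the check when the gap is too wide. The data, the group labels, and the `MAX_GAP` threshold are all made up for illustration; a real audit would pick metrics and tolerances that fit the decision being made.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1 = selected) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group rate; 0.0 means equal."""
    return max(rates.values()) - min(rates.values())


# Hypothetical screening outcomes: 1 = advanced to interview, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["men"] * 5 + ["women"] * 5

rates = selection_rates(decisions, groups)
gap = parity_gap(rates)

MAX_GAP = 0.2  # assumed tolerance, chosen only for this example
bias_check_passed = gap <= MAX_GAP

print("selection rates:", rates)
print("gap:", round(gap, 2), "| check passed:", bias_check_passed)
```

Treat a failed check the way you would treat a failed unit test: the model doesn't ship until someone understands why.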

3. Think Intersectionally

A system might be fair to women and fair to people of color — but not fair to women of color. Fairness isn’t one-dimensional. It’s layered.

Models must be tested not just across singular categories but across intersections of identity — because injustice compounds.
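To make that concrete, here is a small sketch using synthetic counts (invented for illustration, not real data). Sliced by gender alone or by race alone, every group is selected at the same rate. Sliced by both at once, women of color fall to the bottom. The field names and the `make_records` helper are assumptions for this example.

```python
from collections import defaultdict


def selection_rates(records, keys):
    """Selection rate per group, where a group is the tuple of values for `keys`."""
    totals, positives = defaultdict(int), defaultdict(int)
    for record in records:
        group = tuple(record[k] for k in keys)
        totals[group] += 1
        positives[group] += record["selected"]
    return {g: positives[g] / totals[g] for g in totals}


def make_records(gender, race, n, n_selected):
    """Build n synthetic records, the first n_selected of them marked selected."""
    return [
        {"gender": gender, "race": race, "selected": int(i < n_selected)}
        for i in range(n)
    ]


# Synthetic counts, chosen so the single-attribute views look identical.
records = (
    make_records("man", "white", 30, 16)
    + make_records("woman", "white", 10, 8)
    + make_records("man", "person of color", 10, 8)
    + make_records("woman", "person of color", 10, 4)
)

by_gender = selection_rates(records, ["gender"])
by_race = selection_rates(records, ["race"])
by_both = selection_rates(records, ["gender", "race"])

for label, rates in [("gender", by_gender), ("race", by_race), ("both", by_both)]:
    print(label, {group: round(rate, 2) for group, rate in rates.items()})
```

A test that only looked at the first two slices would have passed this system.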

4. Document Your Data’s Story

Every dataset has a backstory. How it was collected. Who it excludes. What it assumes. Documentation templates like Datasheets for Datasets (for the data) and Model Cards (for the models trained on it) make that story visible.

It’s like ingredient labels on food — transparency matters. If we can’t trace the ingredients of our AI, we shouldn’t trust the recipe.
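One lightweight way to start is to keep that story next to the data in a machine-readable form. The sketch below is a deliberately tiny stand-in, not the published Datasheets for Datasets template, which asks many more questions. The `Datasheet` class, its field names, and the example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Datasheet:
    """A minimal, illustrative record of a dataset's backstory."""

    name: str
    collection_method: str                                # how it was collected
    known_exclusions: list = field(default_factory=list)  # who it excludes
    assumptions: list = field(default_factory=list)       # what it assumes

    def missing_sections(self):
        """Names of sections left empty, so gaps are visible rather than silent."""
        return [key for key, value in asdict(self).items() if not value]


# Hypothetical example entry.
sheet = Datasheet(
    name="loan-applications-example",
    collection_method="Exported from an online application form",
    known_exclusions=["Applicants who applied in person or by phone"],
)

print(json.dumps(asdict(sheet), indent=2))
print("Still undocumented:", sheet.missing_sections())
```

The empty `assumptions` section gets flagged instead of quietly skipped, which is the point: an unlabeled ingredient should be noticed.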

5. Audit Consent — Not Just Collection

A lot of AI systems don’t just use the data you gave — they infer what you didn’t. That’s where consent becomes murky.

Just because a system can infer your pregnancy, religion, or sexuality doesn’t mean it should.

Consent isn’t just a checkbox. It’s ongoing, specific, and revocable.
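In code, "specific and revocable" could look something like the sketch below: consent is stored per purpose, sensitive inferences need their own explicit grant, and revoking takes effect on the very next check. The `ConsentRecord` class, the purpose strings, and the `SENSITIVE_INFERENCES` list are assumptions made for this example, not a standard or a legal definition.

```python
from dataclasses import dataclass, field

# Inferences that general consent should never cover (illustrative list).
SENSITIVE_INFERENCES = {"pregnancy", "religion", "sexuality"}


@dataclass
class ConsentRecord:
    """Per-user consent, tracked purpose by purpose."""

    user_id: str
    purposes: set = field(default_factory=set)

    def grant(self, purpose):
        self.purposes.add(purpose)

    def revoke(self, purpose):
        self.purposes.discard(purpose)

    def allows(self, purpose):
        return purpose in self.purposes


def may_infer(consent, attribute):
    """Sensitive attributes need their own explicit grant, not a blanket one."""
    if attribute in SENSITIVE_INFERENCES:
        return consent.allows(f"infer:{attribute}")
    return consent.allows("personalization")


consent = ConsentRecord(user_id="user-123")  # hypothetical user
consent.grant("personalization")

blanket_only = may_infer(consent, "pregnancy")    # general consent is not enough
consent.grant("infer:pregnancy")
after_grant = may_infer(consent, "pregnancy")     # specific consent given
consent.revoke("infer:pregnancy")
after_revoke = may_infer(consent, "pregnancy")    # revocation applies immediately

print(blanket_only, after_grant, after_revoke)
```

The design choice worth noticing is the default: when nothing specific has been granted, the answer is no.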

6. Build Inclusive Feedback Loops

A truly ethical system makes it easy for people to speak up — and for designers to listen. When a chatbot, credit app, or recommendation engine causes harm, communities should be able to report it without legalese, defensiveness, or shame.

Ethics isn’t static. It evolves. And feedback is your moral telemetry.

7. Red Team for Ethics

“Design for misuse, not just use.”

We red team for cybersecurity. Why not for social risk?

Facebook’s own internal researchers reportedly warned that its recommendation algorithms were amplifying outrage and division.

They were right. And they were ignored.

Red teaming for ethics means intentionally probing for failure modes — before the real world does it for you.

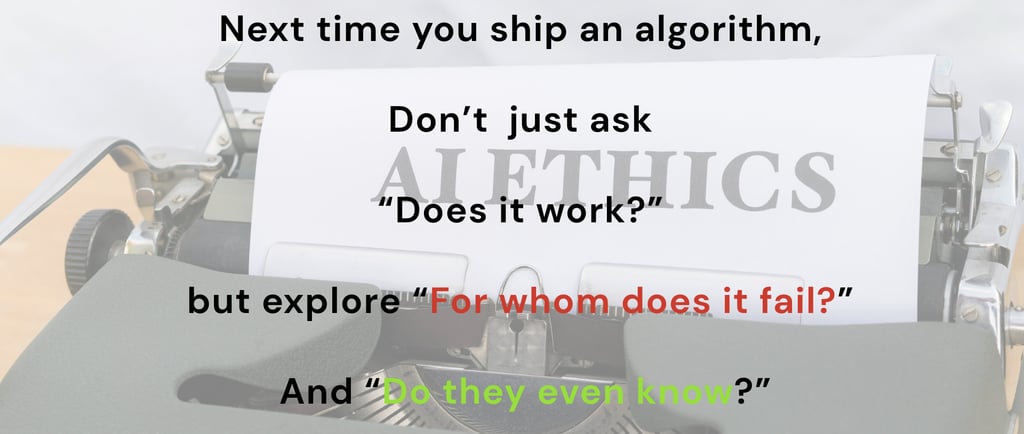

What This Means for All of Us

The algorithms aren’t evil. They’re indifferent. And that’s what makes them dangerous.

But we aren’t indifferent. We can build AI that doesn’t just scale intelligence — but scales integrity.

Not by making it perfect. But by making it accountable, transparent, and inclusive.

Because when an algorithm decides something about you — whether it’s your identity, your opportunities, or your worth — you deserve to know:

Why it decided what it did

What data it used

And whether you can question it

“Fair AI isn’t just technically correct. It’s ethically courageous.”

Let’s build that kind of AI. Together.

What’s your experience with AI systems that felt unfair — or empowering?

What’s working in your organization when it comes to responsible AI?

Who needs to be at the table for your next AI design decision?

Drop your thoughts. Tag someone who’s building AI. Start the conversation that creates change.

#EthicalAI #PrivacyByDesign #HumanCenteredAI #DigitalTrust