Human + Machine Decisioning: A Framework for Shared Intelligence

"Human + Machine Decisioning: A Framework for Shared Intelligence" explores how organizations can architect intelligent decision pipelines that combine human judgment, AI-driven insights, and continuous feedback. Learn when to automate, when to augment, and how to govern decisions in a GenAI-driven world. Discover practical frameworks for defining decision ownership, calibrating trust in machine learning, and leading with clarity, empathy, and accountability.

Nivarti Jayaram

10/14/2025 · 6 min read

The Future of Leadership Isn’t Human vs. AI — It’s Human with AI

Let’s start with an uncomfortable truth:

We’ve never had more data — or more doubt.

Every day, leaders are surrounded by dashboards that glow with predictions, probabilities, and patterns.

AI systems whisper answers with 98% confidence, and yet many leaders still lie awake wondering — did we make the right call?

That tension between trusting the machine and trusting ourselves is the defining leadership challenge of our time.

Generative AI and machine learning promise speed, scale, and precision. But leadership has never been about speed; it’s about sense. And as Brené Brown reminds us, “Clear is kind.”

So let’s bring clarity — and kindness — to how humans and machines can make better decisions together.

The Myth of the Binary Choice

When it comes to AI, most conversations start with a false choice:

Do we automate this or not?

Do we trust the model or our gut?

Is this a human decision or a machine decision?

But here’s the truth — it’s rarely either/or. It’s almost always both/and.

We don’t need machines to replace our intelligence; we need them to extend it.

When designed right, AI doesn’t reduce human judgment — it refines it.

This is the essence of shared intelligence:

A partnership between human intuition and machine computation, built on feedback, governance, and trust.

The Evolution of Decision-Making

For centuries, decision-making was linear and hierarchical. A few people at the top had access to information, and everyone else followed.

Then came the data era, with its dashboards, analytics, and KPIs. Suddenly everyone had information, but not everyone had insight.

Now, in the GenAI era, information isn’t just available — it’s predictive.

The question isn’t “What happened?” It’s “What might happen next?”

And that’s where complexity multiplies. Because when probability enters the room, certainty leaves.

Why Human Judgment Still Matters

AI models are extraordinary pattern recognizers.

They can process millions of data points, run simulations, and, within the range of their training data, predict outcomes with astonishing accuracy.

But here’s what they can’t do:

They can’t see context beyond their data boundaries.

They can’t weigh values when choices have moral trade-offs.

They can’t understand emotion — fear, hope, empathy, trust — that drives human systems.

As Adam Grant writes, “The smarter you are, the more you need others to challenge your thinking.” The same applies to AI. The smarter our models get, the more they need human context to stay grounded in meaning.

Human judgment provides narrative coherence — the story that numbers alone can’t tell.

Why “Shared Intelligence” Matters Now

Every decision we make sits on a continuum — between intuition and evidence, between gut feel and model output. But most enterprises today are still architected for a binary world: either the human decides or the system does.

That’s outdated.

In the age of Generative AI and autonomous systems, we need decision architectures that blend strengths:

Machines that can see patterns across billions of data points

Humans who can interpret context, ethics, and empathy

When these capabilities converge, decisions become not only faster but also wiser. But that convergence requires intentional design, not blind automation.

The Anatomy of a Human + Machine Decision Pipeline

Think of a modern decision as a pipeline, not a point in time.

It flows through five distinct layers:

Sensing Layer — What’s Happening?

Data streams, models, sensors, and dashboards that continuously monitor and detect signals.

AI role: Identify anomalies, correlations, and trends at scale.

Human role: Interpret the signal’s meaning within business or social context.

Framing Layer — What’s the Question?

Before we decide, we must frame what’s at stake.

AI role: Suggest possible problem statements or options based on data.

Human role: Validate which question matters and aligns with purpose and values.

Reasoning Layer — What Are the Options?

AI role: Generate options using models, scenarios, or simulations.

Human role: Weigh ethical trade-offs, externalities, and stakeholder implications.

Decision Layer — What Will We Do?

AI role: Provide probabilities, risk scores, and potential outcomes.

Human role: Make the final call when consequences are strategic, moral, or irreversible.

Feedback Layer — What Happened & What Will We Learn?

AI role: Measure outcomes, recalibrate models, and close the learning loop.

Human role: Reflect, adapt governance, and learn from near misses or unexpected consequences.

When we architect decisioning like this — as a continuous, co-evolving process — we build systems that learn with us, not just for us.

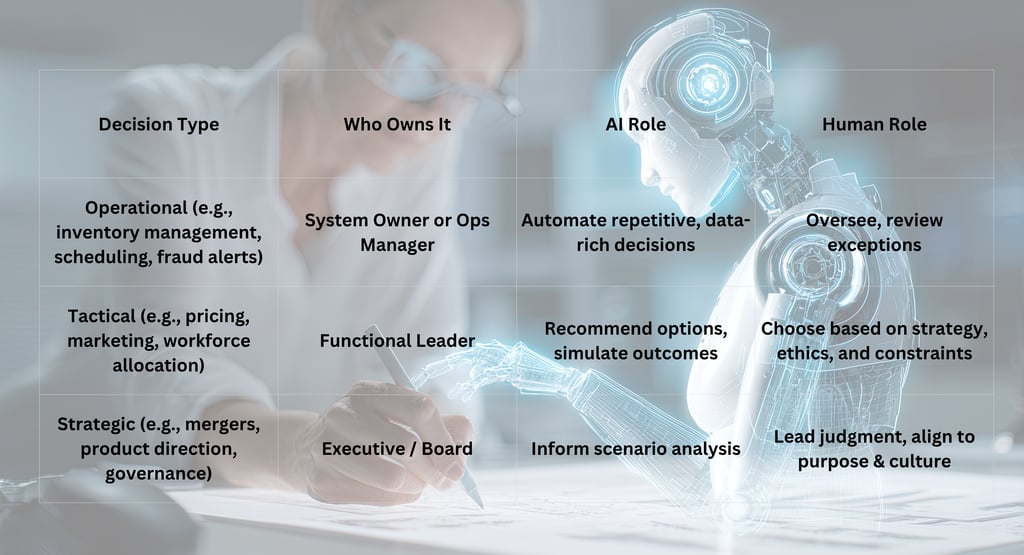
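To make the five layers concrete, here is a minimal Python sketch, assuming each layer can be modeled as an AI step followed by a human checkpoint over a shared context. The names `Layer`, `run_pipeline`, `sense`, and `accept`, the toy restocking scenario, and the 2-standard-deviation anomaly rule are all hypothetical illustrations, not a prescribed implementation.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Step = Callable[[Context], Context]


@dataclass
class Layer:
    name: str
    ai_step: Step     # machine contribution at this layer
    human_step: Step  # human checkpoint at this layer


def run_pipeline(layers: List[Layer], ctx: Context) -> Context:
    """Pass one decision through every layer: AI step first, then the human checkpoint.

    The context is copied on entry, and an audit trail records which party
    touched the decision at each layer.
    """
    ctx = {**ctx, "trail": list(ctx.get("trail", []))}
    for layer in layers:
        ctx = layer.ai_step(ctx)
        ctx["trail"].append(f"{layer.name}/ai")
        ctx = layer.human_step(ctx)
        ctx["trail"].append(f"{layer.name}/human")
    return ctx


def sense(c: Context) -> Context:
    # Toy anomaly check (hypothetical rule): flag the latest reading if it sits
    # more than 2 standard deviations away from its history.
    z = (c["latest"] - mean(c["history"])) / stdev(c["history"])
    return {**c, "anomaly": abs(z) > 2}


def accept(c: Context) -> Context:
    # Stand-in for a human review that accepts the AI output unchanged.
    return c


layers = [
    Layer("sensing", sense, lambda c: {**c, "human_context": "promotion running this week"}),
    Layer("framing", lambda c: {**c, "question": "Restock early?" if c["anomaly"] else "Hold?"}, accept),
    Layer("reasoning", lambda c: {**c, "options": ["restock", "hold"]}, accept),
    Layer("decision", lambda c: {**c, "risk_score": 0.2},
          lambda c: {**c, "decision": c["options"][0], "owner": "operations lead"}),
    Layer("feedback", lambda c: {**c, "to_evaluate": [c["decision"]]}, accept),
]

result = run_pipeline(layers, {"history": [100, 102, 98, 101, 99], "latest": 130})
print(result["question"], result["decision"], result["owner"])
print(result["trail"])
```

The audit trail is the main design choice here: every layer leaves a record of where the machine contributed and where a person signed off, which is exactly what the Feedback Layer needs in order to learn.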

Decision Ownership: Who Decides What?

Shared intelligence doesn’t mean shared accountability.

Every decision still needs a clear owner — a person (or role) who is responsible for the final judgment and outcome.
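One way to make ownership explicit is to refuse to record a decision without it. The sketch below is a hypothetical `DecisionRecord` (not part of any framework named in this article) that stores the model’s recommendation next to the human owner’s final call, so overrides stay visible and accountability never shifts to the model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One decision with a single accountable owner, whatever the model suggested."""
    decision_id: str
    owner: str                           # accountable person or role, never "the model"
    model_recommendation: Optional[str]  # None when no model was consulted
    final_call: str
    rationale: str
    decided_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        # Refuse to record a decision that has no named owner.
        if not self.owner.strip():
            raise ValueError("every decision needs a named owner (person or role)")

    @property
    def overrode_model(self) -> bool:
        """True when the owner chose differently from the model's recommendation."""
        return (self.model_recommendation is not None
                and self.final_call != self.model_recommendation)


record = DecisionRecord(
    decision_id="loan-1042",
    owner="Head of Credit Risk",
    model_recommendation="approve",
    final_call="decline",
    rationale="Recent context the model's training data does not cover",
)
print(record.owner, record.overrode_model)
```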

Here’s a simple way to think about ownership across three domains:

The “Trust Calibration” Framework

Trust in AI isn’t binary; it’s dynamic. Rather than simply trusting or distrusting, we calibrate trust based on confidence, context, and consequence.

To calibrate trust, leaders can use three lenses:

Confidence: How reliable are the data and models?

High data quality, well-trained model → a higher level of trust is warranted

Sparse or biased data → manual validation required

Context: What’s the decision’s domain?

Routine, high-volume → lean toward automation

Complex, ambiguous, value-driven → lean toward human judgment

Consequence: What’s the impact of being wrong?

Low impact → allow model-led execution with oversight

High impact → require human validation, moral reasoning, and governance review
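The three lenses can be encoded as a small rule set. This is a sketch under stated assumptions: each lens is reduced to two levels, the enum and function names are hypothetical, and the rules combine conservatively, so any lens that signals risk adds a human safeguard, and model-led execution is allowed only when all three lenses agree.

```python
from enum import Enum
from typing import List


class Confidence(Enum):
    HIGH = "high"  # high data quality, well-trained model
    LOW = "low"    # sparse or biased data


class DecisionContext(Enum):
    ROUTINE = "routine"  # routine, high-volume
    COMPLEX = "complex"  # complex, ambiguous, value-driven


class Consequence(Enum):
    LOW = "low"    # low impact if wrong
    HIGH = "high"  # high impact if wrong


def calibrate_trust(confidence: Confidence, context: DecisionContext,
                    consequence: Consequence) -> List[str]:
    """Return the safeguards a decision needs, one per lens that signals risk."""
    safeguards: List[str] = []
    if confidence is Confidence.LOW:
        safeguards.append("manual validation of data and model output")
    if context is DecisionContext.COMPLEX:
        safeguards.append("human judgment leads; the model advises")
    if consequence is Consequence.HIGH:
        safeguards.append("human validation, moral reasoning, and governance review")
    # Only when no lens raised a concern does the model lead.
    return safeguards or ["model-led execution with oversight"]


print(calibrate_trust(Confidence.HIGH, DecisionContext.ROUTINE, Consequence.LOW))
print(calibrate_trust(Confidence.HIGH, DecisionContext.ROUTINE, Consequence.HIGH))
```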

A good leader doesn’t just “trust the model” — they interrogate the model:

“What are you optimizing for?

Who defines success?

What are you blind to?”

That’s adaptive leadership in action.

Where AI Ends and Humanity Begins

There’s a growing danger of over-relying on machine outputs — not because the models are evil, but because they’re seductive. AI gives us confidence, clarity, and speed — things humans crave when uncertainty looms.

But here’s the truth: AI doesn’t understand meaning; it understands math. Machines don’t feel responsibility or regret. They don’t weigh fairness or compassion. They predict, but they don’t care.

That’s why human oversight isn’t a compliance checkbox — it’s a moral compass.

As Brené Brown says: “Clear is kind. Unclear is unkind.”

Clarity in AI governance means being explicit about:

When the machine decides

When the human intervenes

How we hold each accountable

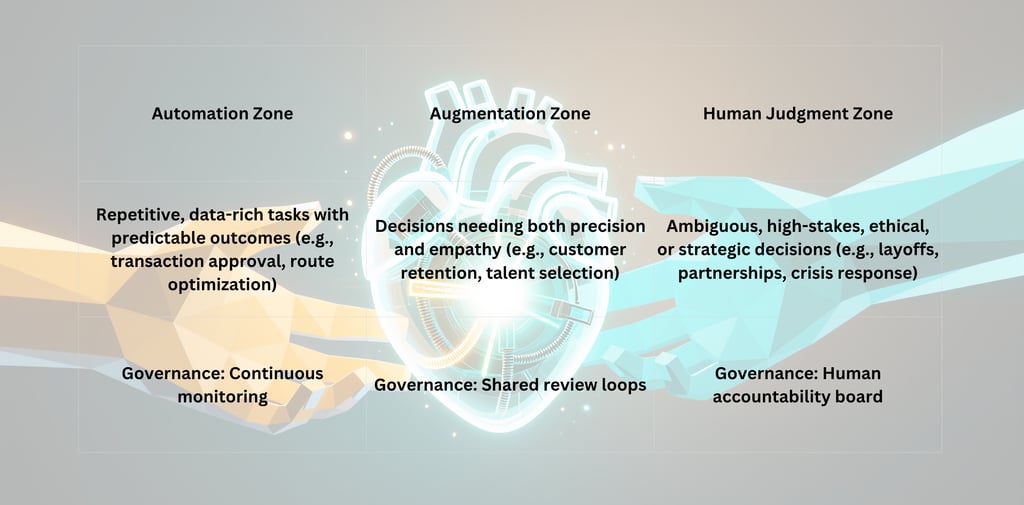

When to Automate, When to Augment, When to Pause

To operationalize shared intelligence, leaders need a decision triage system.

The magic isn’t in choosing one zone — it’s in designing seamless transitions between them. That’s where trust, transparency, and human adaptability come alive.
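The zones can be sketched as a triage function. This assumes the three zones named in the heading map to automate (the machine decides), augment (the machine recommends and a person decides), and pause (nobody acts until a person has investigated). The function name, its inputs, and the 0.95 and 0.60 thresholds are illustrative assumptions, not recommended values.

```python
from enum import Enum


class Zone(Enum):
    AUTOMATE = "automate"  # the machine decides; humans audit outcomes
    AUGMENT = "augment"    # the machine recommends; a human decides
    PAUSE = "pause"        # nobody acts until a human has investigated


def triage(model_confidence: float, high_impact: bool, novel_situation: bool,
           automate_at: float = 0.95, augment_at: float = 0.60) -> Zone:
    """Route one decision to a zone. Both thresholds are illustrative assumptions."""
    if not 0.0 <= model_confidence <= 1.0:
        raise ValueError("model_confidence must be between 0 and 1")
    # Unfamiliar territory or weak confidence: stop before anyone acts.
    if novel_situation or model_confidence < augment_at:
        return Zone.PAUSE
    # High stakes or middling confidence: a person makes the call.
    if high_impact or model_confidence < automate_at:
        return Zone.AUGMENT
    return Zone.AUTOMATE


for case in [(0.98, False, False), (0.98, True, False), (0.75, False, False),
             (0.40, False, False), (0.99, False, True)]:
    print(case, triage(*case).value)
```

Because the thresholds are explicit parameters, they become the governed transition points between zones: raising `automate_at` shifts more decisions from automation to augmentation without rewriting any logic.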

Feedback Loops: The Soul of Shared Intelligence

Every decision — human or machine — creates data.

The most adaptive organizations treat those outcomes as living feedback loops:

Did we get the result we expected?

What signals did we miss?

How did bias show up in the outcome?

Machine learning systems improve with retraining.

Human systems improve with reflection.

Combine both, and you get collective intelligence — a learning organism where technology and humanity grow together.
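Part of this loop can be automated. The sketch below swaps in a standard technique the article does not name, the Brier score (the mean squared difference between predicted probabilities and actual 0/1 outcomes), to answer “Did we get the result we expected?”, and it treats a large gap in Brier score between groups as one rough signal of uneven errors that deserves human reflection. The thresholds, field names, and sample data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Outcome:
    predicted: float  # model's probability that the event would happen
    happened: bool    # what actually happened
    group: str        # segment used to check whether errors fall unevenly


def brier(rows: List[Outcome]) -> float:
    """Mean squared gap between predicted probability and outcome (0.0 is perfect)."""
    return sum((r.predicted - float(r.happened)) ** 2 for r in rows) / len(rows)


def review(rows: List[Outcome], max_brier: float = 0.2, max_gap: float = 0.1) -> dict:
    """Compare expectations with outcomes, overall and per group."""
    by_group: Dict[str, List[Outcome]] = defaultdict(list)
    for r in rows:
        by_group[r.group].append(r)
    group_scores = {g: brier(rs) for g, rs in by_group.items()}
    overall = brier(rows)
    gap = max(group_scores.values()) - min(group_scores.values())
    return {
        "overall_brier": overall,
        "group_brier": group_scores,
        "retrain": overall > max_brier,  # machine side: recalibrate or retrain
        "reflect": gap > max_gap,        # human side: investigate uneven errors
    }


outcomes = [
    Outcome(0.9, True, "A"), Outcome(0.8, True, "A"), Outcome(0.2, False, "A"),
    Outcome(0.9, False, "B"), Outcome(0.3, True, "B"), Outcome(0.1, False, "B"),
]
report = review(outcomes)
print(report)
```

The two flags mirror the two halves of the loop: `retrain` is a prompt for the machine side, while `reflect` is a prompt for people to ask what signals were missed and where bias may have shown up.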

The New Skill Set of the Human + Machine Leader

The next generation of leaders won’t just manage teams — they’ll manage systems of intelligence.

Here’s what they’ll need to cultivate:

AI Literacy – Understanding models, data biases, and algorithmic reasoning.

Ethical Foresight – Seeing the unintended consequences of automation.

Systems Thinking – Connecting data flows, human workflows, and organizational outcomes.

Psychological Safety – Creating environments where humans feel safe to question the model.

Decision Governance – Building frameworks for accountability, calibration, and trust.

Adaptive Sensemaking – Continuously aligning actions with changing data, context, and purpose.

As Adam Grant says:

“The hallmark of wisdom is knowing when to rethink.”

The leaders who thrive in this age will not be those who know all the answers — but those who are skilled at asking better questions of both people and machines.

The Human Imperative in a Machine World

Shared intelligence isn’t about replacing the human mind — it’s about amplifying it.

The future of leadership isn’t about commanding algorithms — it’s about collaborating with them.

AI will keep getting smarter. Our real challenge is to ensure we do too. Because when we combine the machine’s capacity to predict with the human capacity to care, we don’t just make faster decisions — we make better ones.

Ones that honor both intelligence and integrity.

Ones that build not only profit — but trust.

Final Reflection:

The question for leaders isn’t “Will AI take my job?”

It’s:

“Am I learning fast enough to lead in partnership with intelligence — not in competition with it?”

That’s the essence of shared intelligence. That’s the next evolution of leadership.